Artificial Intelligence and the Environment: Unveiling the Hidden Costs and Sustainable Solutions

Artificial Intelligence (AI) has revolutionized nearly every aspect of modern life, from personalized digital assistants to breakthroughs in medicine and transportation. However, beneath the surface of this technological marvel lies a growing environmental cost that often goes unnoticed. AI’s rapid expansion depends on immense computational power, much of it supplied by energy-intensive data centers. These facilities not only strain global energy grids but also contribute to water scarcity, electronic waste, and greenhouse gas emissions.

As AI continues to permeate our daily routines, it’s critical to examine how we can support its growth without compromising the planet. This article explores the environmental toll of AI, its less-visible consequences, and the sustainable practices that can guide us toward a greener digital future.

The Rise of AI: A Brief History

AI’s origins trace back to the 1950s, when visionaries like Alan Turing and John McCarthy began exploring the idea of machines mimicking human intelligence. While early developments were limited, they laid the foundation for today’s sophisticated machine learning and neural network technologies.

The 1990s and early 2000s marked a turning point, as faster internet speeds and cheaper storage enabled researchers to collect and process vast amounts of data. This, combined with advances in parallel computing and the rise of cloud infrastructure, propelled AI into mainstream applications across industries such as healthcare, finance, and autonomous vehicles.

AI’s Expanding Footprint

Today, AI is embedded in everything from search engines and recommendation systems to self-driving cars and advanced medical diagnostics. According to Gartner, worldwide spending on AI software is forecast to reach nearly $300 billion by 2027. However, this growth comes at a steep environmental cost.

Training large AI models requires vast computational resources. A study by researchers at the University of Massachusetts Amherst found that training a single large natural-language model, including the architecture search used to tune it, can emit roughly as much carbon dioxide as five cars over their entire lifetimes. As companies race to develop more powerful models, the environmental footprint of AI continues to grow.

Key Environmental Challenges of AI

1. Energy Consumption in Data Centers

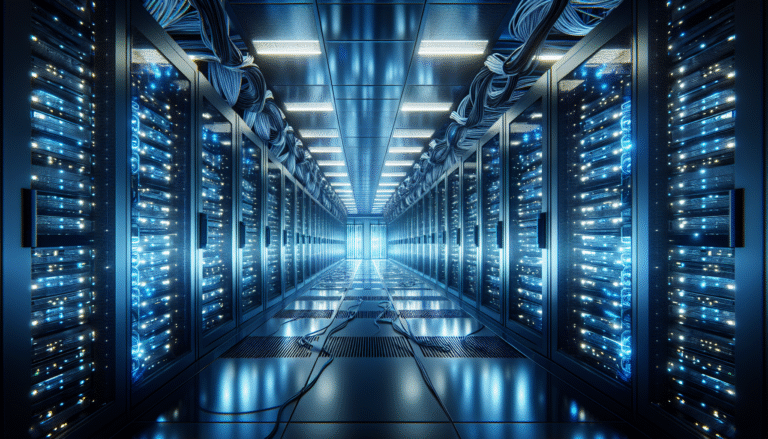

Data centers are the backbone of AI infrastructure, running 24/7 to support model training and real-time inference. These facilities consume enormous amounts of electricity, a substantial share of it for cooling the servers. The International Energy Agency estimates that data centers currently account for around 1% of global electricity use, a share expected to climb as AI adoption increases.

Training a single large AI model can use as much electricity as dozens of homes, or in the largest cases more than a hundred, consume in a year, underscoring the need for more efficient computing practices.

2. Carbon Emissions and Fossil Fuel Dependency

Many data centers still rely on fossil fuels for power. In the U.S., data centers were responsible for about 2.18% of national CO₂ emissions in 2023, and their emissions had roughly tripled since 2018. Despite pledges from tech giants to transition to renewable energy, much of the infrastructure remains tied to non-renewable sources.

This reliance not only contributes to climate change but also affects public health. Air pollution linked to powering data centers has been associated with an estimated $5.4 billion in public health costs in recent years.

3. Rare Earth Metals and E-Waste

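AI hardware, from GPUs and specialized chips to the servers, storage drives, and cooling equipment that support them, depends on mined materials, including rare earth elements such as neodymium and praseodymium, which are used in the permanent magnets of hard drives and fans. Mining these metals can cause deforestation, water contamination, and the release of toxic substances.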

Additionally, as AI hardware evolves rapidly, outdated devices are discarded, contributing to a growing e-waste crisis. If current trends continue, generative AI alone could produce a cumulative 1.2 to 5 million metric tons of e-waste by 2030.

4. Water Usage for Cooling

Data centers also consume vast amounts of water to cool their servers. A single large facility can use up to 300,000 gallons of water per day, roughly the daily usage of 1,000 homes.
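The comparison in the paragraph above can be checked with simple arithmetic. The short Python sketch below derives the per-household figure the comparison implies and annualizes the facility's daily draw. It assumes the facility uses the upper-end 300,000 gallons every day of the year, which real facilities, whose cooling demand varies with weather and load, will not do exactly; the function names are illustrative, not part of any library.

```python
# Back-of-the-envelope check of the water comparison quoted in the text.
# Assumption: the facility draws the upper-end figure every day of the year.

FACILITY_GALLONS_PER_DAY = 300_000  # upper-end figure quoted in the text
HOMES_EQUIVALENT = 1_000            # comparison figure quoted in the text


def gallons_per_home_per_day(facility_gallons: float, homes: int) -> float:
    """Daily water use per household implied by the comparison."""
    if homes <= 0:
        raise ValueError("homes must be a positive number")
    return facility_gallons / homes


def facility_gallons_per_year(gallons_per_day: float, days: int = 365) -> float:
    """Annualize a daily figure, assuming constant use on every day."""
    return gallons_per_day * days


if __name__ == "__main__":
    per_home = gallons_per_home_per_day(FACILITY_GALLONS_PER_DAY, HOMES_EQUIVALENT)
    yearly = facility_gallons_per_year(FACILITY_GALLONS_PER_DAY)
    print(f"Implied household use: {per_home:.0f} gallons/day")     # 300
    print(f"One facility, annualized: {yearly:,.0f} gallons/year")  # 109,500,000
```

On these numbers, the comparison assumes about 300 gallons per household per day, and a facility running at that rate year-round would use roughly 110 million gallons annually.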

In regions like Northern Virginia’s “Data Center Alley,” this demand puts added pressure on local water supplies. In 2023 alone, the area’s data centers consumed 1.85 billion gallons of water, raising concerns about the sustainability of such facilities, especially in drought-prone regions.
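Converting the annual regional total to a daily average makes it easier to compare with the single-facility figure given earlier in this section. The Python sketch below assumes consumption was spread evenly across 2023, which ignores seasonal swings in cooling demand, and treats the 300,000-gallon figure as a yardstick rather than a measured value for any particular Northern Virginia site; the function names are illustrative.

```python
# Rough scale comparison between a regional annual total and the
# single-facility daily figure quoted earlier in the text.
# Assumption: annual use is spread evenly over a 365-day year.

REGION_GALLONS_2023 = 1.85e9        # Northern Virginia total quoted in the text
FACILITY_GALLONS_PER_DAY = 300_000  # upper-end single-facility figure from the text


def daily_average(annual_gallons: float, days: int = 365) -> float:
    """Average daily consumption, assuming even use across the year."""
    return annual_gallons / days


def facility_equivalents(annual_gallons: float, facility_per_day: float,
                         days: int = 365) -> float:
    """How many facilities at `facility_per_day` would match the annual total."""
    return daily_average(annual_gallons, days) / facility_per_day


if __name__ == "__main__":
    per_day = daily_average(REGION_GALLONS_2023)
    equivalents = facility_equivalents(REGION_GALLONS_2023, FACILITY_GALLONS_PER_DAY)
    print(f"Average regional use: {per_day:,.0f} gallons/day")            # 5,068,493
    print(f"Equivalent to about {equivalents:.1f} peak-rate facilities")  # 16.9
```

Under those assumptions, 1.85 billion gallons averages to roughly 5 million gallons per day, about as much as 17 facilities running at the upper-end rate.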

5. Indirect Energy Use Through Network Infrastructure

AI’s environmental impact extends beyond data centers. Every time a user interacts with an AI system—whether through a chatbot or a recommendation engine—data travels through a network of routers, switches, and servers, all of which consume energy.

For example, a single ChatGPT query may use about 0.0025 kWh (2.5 Wh) of electricity. Each query’s share is small, but across millions of daily queries the cumulative total becomes significant.
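Because the total grows linearly with query volume, a small per-query figure adds up quickly. The Python sketch below scales the 0.0025 kWh estimate quoted above. The volume of 10 million queries per day is a hypothetical round number chosen for illustration, not a reported usage figure, and the sketch assumes every query costs the same amount of energy.

```python
# Scale a per-query electricity estimate up to daily and yearly totals.
# Assumption: every query uses the same amount of energy.

KWH_PER_QUERY = 0.0025  # per-query estimate quoted in the text


def daily_energy_kwh(queries_per_day: int, kwh_per_query: float = KWH_PER_QUERY) -> float:
    """Total electricity for one day of queries, in kWh."""
    return queries_per_day * kwh_per_query


def annual_energy_mwh(queries_per_day: int, kwh_per_query: float = KWH_PER_QUERY,
                      days: int = 365) -> float:
    """Total electricity over `days` days, converted from kWh to MWh."""
    return daily_energy_kwh(queries_per_day, kwh_per_query) * days / 1_000


if __name__ == "__main__":
    hypothetical_queries = 10_000_000  # hypothetical volume, not a reported figure
    print(f"{daily_energy_kwh(hypothetical_queries):,.0f} kWh per day")    # 25,000
    print(f"{annual_energy_mwh(hypothetical_queries):,.0f} MWh per year")  # 9,125
```

At that hypothetical volume, the per-query estimate works out to about 25,000 kWh (25 MWh) per day, or roughly 9,125 MWh over a year.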

Unseen Consequences: Social and Local Impacts

1. Lack of Regulatory Oversight

In the U.S., there is currently no federal mandate requiring companies to report the energy usage or emissions associated with AI. Most tech firms self-regulate based on internal sustainability goals. While some government initiatives promote energy efficiency, enforce