Do you want to improve your WooCommerce SEO but don’t know where to start?

For those in the eCommerce space, running a WooCommerce store is just the beginning; optimizing it for search engines is the key to making your store stand out.

With so much competition online, it’s vital to take steps that make your products easy to find. WooCommerce SEO is all about implementing a comprehensive strategy to drive traffic, increase conversions, and grow your business.

This guide is written with the unique challenges of WooCommerce store owners in mind. It simplifies WooCommerce SEO, making it accessible even for beginners. By following these strategies, you’ll position your online store for success.


What is WooCommerce?

WooCommerce is a useful plugin that transforms your WordPress website into an eCommerce platform. With it, you can sell products, manage payments, and even handle shipping directly on your site.

The platform has over 5 million users because of its flexibility and ease of use. You can sell anything, from physical goods to digital downloads or services.

And because WooCommerce is open-source, developers from around the world can continuously contribute to its features and extensions. This makes WooCommerce customizable and scalable for businesses of all sizes.

Getting started with WooCommerce is straightforward. Once it is installed, you can add essential eCommerce features without needing advanced technical skills. The platform even provides tools for tracking inventory and viewing detailed sales reports.

When paired with WooCommerce SEO strategies, this plugin becomes even more powerful. It lets you optimize your store for search engines so customers can easily find your products online.

What is WooCommerce SEO?

You may already be familiar with the general rules of SEO, but what makes WooCommerce SEO different?

Unlike general SEO, WooCommerce SEO focuses on the unique needs of e-commerce stores. For example, it involves optimizing product pages with detailed descriptions and keywords. It also includes managing customer reviews and ensuring products are indexed correctly for search engines.

WooCommerce SEO also emphasizes technical aspects. Improving your website’s speed, ensuring mobile-friendliness, and creating a simple navigation structure are key tasks.

Why is WooCommerce SEO important?

- Visibility in a Crowded Market: SEO helps your store appear on the first page of search results, placing you directly in front of potential customers.

- Targeted Traffic: Proper optimization attracts the right audience—shoppers actively searching for your products.

- Enhanced User Experience: SEO best practices, like fast-loading pages and mobile-friendly designs, create a seamless shopping experience.

- Long-Term Growth: Unlike paid ads, SEO efforts compound over time, driving consistent organic traffic and sales.

Now that we’ve covered what WooCommerce SEO is and its importance, let’s dive into the steps to make your online store stand out among the rest.

Preparing Your WooCommerce Store for SEO

Choosing a Reliable Hosting Provider

When it comes to WooCommerce SEO, your web host directly affects your site’s performance, speed, and security, all of which are critical for search engine rankings. In short, a good hosting provider helps your store meet the needs of both search engines and customers.

Search engines like Google prioritize fast-loading websites, and a quality host helps achieve this. Features like SSD storage and built-in caching can significantly enhance your store’s speed.

Uptime reliability is another key consideration. A store that is frequently down loses potential sales and search engine trust. Reliable hosting providers guarantee at least 99.9% uptime so your store is always available.

Security is equally important when choosing a hosting provider. A secure website builds trust with your customers and protects their data. To help safeguard your store while boosting its SEO potential, look for hosting services that include SSL certificates, daily backups, and malware protection.

As an example, GreenGeeks offers tailored WooCommerce hosting with fast speeds, solid uptime, and robust security. We provide advanced features like SSD storage, free SSL certificates, and 24/7 expert support. With eco-friendly practices and reliable performance, GreenGeeks helps lay a strong SEO foundation for your online store.

Selecting an SEO-Friendly WooCommerce Theme

A good theme is the foundation of a high-performing website. It influences how fast your pages load and how easily search engines can index your content. Themes with clean, efficient code are a clear winner. They help your site run faster and reduce potential SEO issues.

Responsive design is another key factor to consider. Your theme should work seamlessly on all devices, especially mobile phones. With Google’s mobile-first indexing, a site that isn’t mobile-friendly will struggle to rank well. A responsive theme makes your online store look great and perform smoothly on any screen size.

Speed is also a critical aspect of WooCommerce SEO. A lightweight theme optimized for fast loading reduces bounce rates. Search engines also favor faster sites, so investing in a theme with built-in speed optimization is always a wise choice.

Additionally, look for features like schema markup and breadcrumb navigation. These tools help search engines understand your site’s structure and content better. As a result, you can gain enhanced search snippets and improved visibility. These features also make it easier for customers to navigate your site, boosting their shopping experience.

Installing Essential Plugins

To achieve excellent WooCommerce SEO, you need essential plugins that optimize your store and enhance its functionality. Below is a list of must-have plugins and how they contribute to better WooCommerce SEO:

1. Yoast SEO

Yoast SEO is a trusted plugin that helps improve WooCommerce SEO. It offers features like meta descriptions, focus keywords, and readability checks, making it easier to create content that ranks well in search results.

With Yoast SEO, optimizing product pages, blog posts, and category descriptions becomes simple and efficient. This ensures your store follows SEO best practices, increasing visibility to potential buyers. It’s especially valuable for eCommerce businesses competing in crowded markets.

2. All in One Schema Rich Snippets

This plugin helps your store stand out in search results. It adds schema markup to your product pages, allowing search engines to display rich snippets. These can include ratings, prices, and stock status. Rich snippets make your site more visually appealing and informative in search results, increasing click-through rates.

3. WP Rocket

Page speed is a major ranking factor, and WP Rocket optimizes your site for faster loading times. It improves caching, minifies CSS and JavaScript, and optimizes images.

4. Smush

High-quality images are vital for eCommerce but can slow your site down. Smush compresses images without sacrificing quality.

5. Redirection

Managing broken links is essential for SEO. The Redirection plugin helps you fix or redirect 404 errors, so visitors and search engine crawlers don’t run into dead ends.

6. SEO Optimized Images

This plugin automatically adds ALT text and title attributes to your product images. Search engines use this metadata to understand your content. In effect, this improves your site’s visibility in image search results.

7. Google Analytics for WooCommerce

With this plugin, you can track eCommerce-specific data, such as product performance and sales trends. Combined with SEO insights, this data helps you identify which pages and products need further optimization.

How to Enable SEO Settings on Your WooCommerce Website

To begin, install reliable SEO plugins like Yoast SEO and those mentioned above. These tools offer features to optimize your product pages, site structure, and overall SEO settings. They make managing SEO much easier, even for beginners.

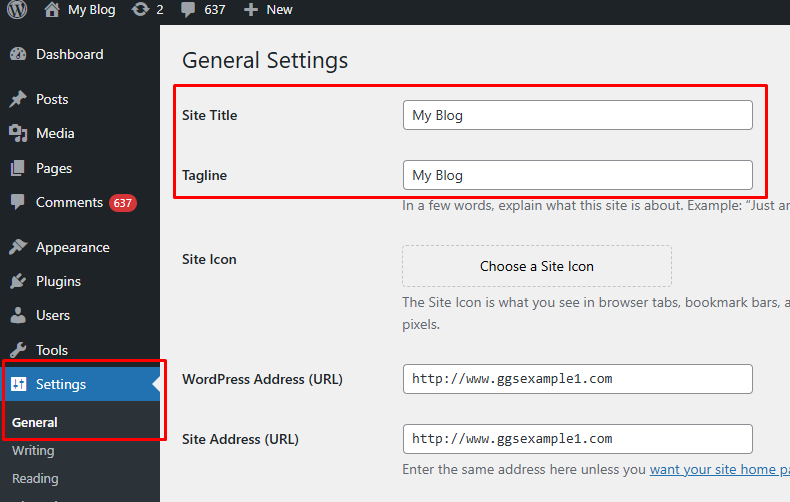

Next, configure your general SEO settings to align with best practices. Start with your site title and tagline under Settings > General in your WordPress dashboard.

Your title should reflect your brand, while the tagline provides a brief description of your store.

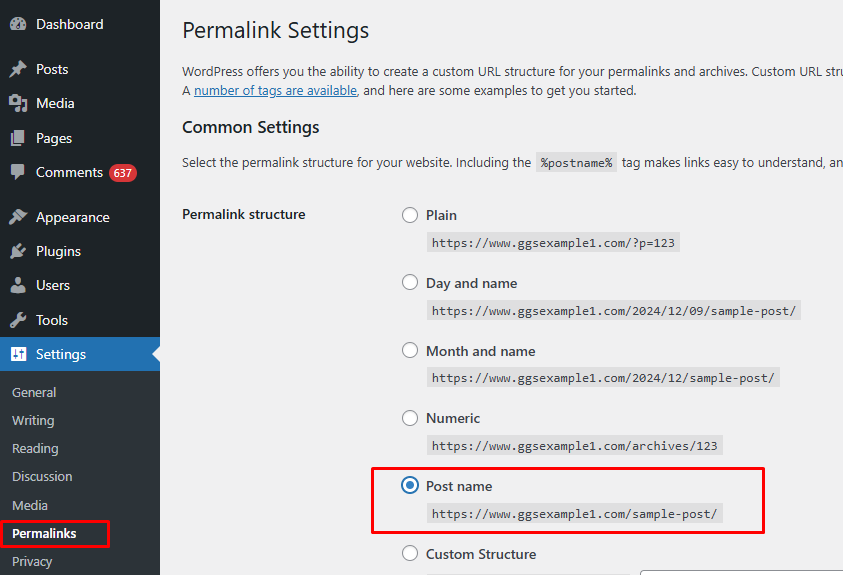

Then, update your permalink structure in Settings > Permalinks to the “Post name” option. This creates clean, keyword-rich URLs that are easy for search engines and users to understand.
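To picture what a “Post name” style URL looks like, here is a rough Python sketch that turns a title into a slug. It only approximates the idea and is not the function WordPress itself uses; the name `slugify` is made up for this example.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Approximate a URL slug: lowercase ASCII words joined by hyphens.

    Illustrative only; WordPress applies its own, more thorough rules.
    """
    # Strip accents and drop any character that has no ASCII equivalent.
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Remove apostrophes so "Men's" becomes "mens" rather than "men-s".
    ascii_text = ascii_text.replace("'", "")
    # Replace every run of other characters with a single hyphen.
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")
```

A product called “Men's Bifold Brown Leather Wallet” would come out as `mens-bifold-brown-leather-wallet`, which is short, readable, and carries the main keywords.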

Optimizing product pages is another crucial step. You must create unique, descriptive titles for each product incorporating relevant keywords. Write detailed product descriptions that highlight features and benefits.

Don’t forget to add meta descriptions as well. A compelling meta description can encourage more people to click on your product pages from search results.

Organizing your categories and tags is also important. Group your products into clear categories and give each category a short description. Use specific tags to improve navigation and help search engines understand your store better. This makes it easier for users to find related products and enhances your site’s SEO performance.

On-Page SEO for WooCommerce

Keyword Research for eCommerce

An important aspect of WooCommerce SEO is effective keyword research. This process involves identifying the terms potential customers use when searching for products like yours.

The good thing is that several tools can assist in discovering relevant keywords for your products. Platforms like Ahrefs and Semrush offer comprehensive data on search volumes, competition levels, and related terms. These insights help you identify which keywords are worth targeting.

Additionally, free tools like Google Keyword Planner provide valuable information on popular search terms related to your products. Leveraging these tools can help you compile a list of keywords that further boost your sales.

Targeting Long-Tail and Transactional Keywords

In WooCommerce SEO, two types of keywords to note are long-tail and transactional keywords.

Long-tail keywords are specific phrases that, while having lower search volumes, often lead to higher conversion rates due to their specificity. For example, targeting “buy waterproof running shoes” instead of just “running shoes” can attract customers who are ready to purchase.

Transactional keywords indicate a user’s intent to buy, using terms like “buy,” “discount,” or “deal.” Incorporating these into your product descriptions and meta tags can attract high-intent shoppers, ultimately increasing sales.
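If you keep your keyword list in a spreadsheet, a small script can help you sort it. The sketch below is only an illustration: the three-word threshold for “long-tail” and the list of buying terms are assumptions chosen for this example, not rules used by any search engine or keyword tool.

```python
# Assumed values for illustration; adjust them to suit your own niche.
TRANSACTIONAL_MODIFIERS = {"buy", "discount", "deal"}
LONG_TAIL_MIN_WORDS = 3


def classify_keyword(phrase: str) -> dict:
    """Label a search phrase as long-tail and/or transactional."""
    words = phrase.lower().split()
    return {
        "phrase": phrase,
        # Long-tail here simply means "at least three words".
        "long_tail": len(words) >= LONG_TAIL_MIN_WORDS,
        # Transactional means the phrase contains a buying term.
        "transactional": any(word in TRANSACTIONAL_MODIFIERS for word in words),
    }
```

With these assumptions, “buy waterproof running shoes” is flagged as both long-tail and transactional, while “running shoes” is flagged as neither.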

Optimizing Product Pages

Create Compelling Product Titles with Keywords

Your product title is often the first element that both search engines and customers notice. Because of that, it’s crucial to create unique, descriptive titles that naturally incorporate primary keywords.

For example, instead of a generic title like “Men’s Wallet,” you can use “Men’s Bifold Brown Leather Wallet – Durable and Stylish.” This way, you provide clarity to your buyers while improving relevance in search results.

Write Effective Meta Descriptions

Meta descriptions are brief summaries that appear below your product title in search engine results. They play a significant role in enticing users to click on your link.

Craft compelling meta descriptions that highlight the product’s key features and benefits, incorporating relevant keywords naturally. Building on the previous example, your meta description could be: ‘Discover our Men’s Bifold Brown Leather Wallet, offering durability and style for everyday use.’

Keep your meta description to 160 characters or fewer. Beyond that, Google will usually truncate it, which can leave readers with an incomplete thought.
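If you manage many products, you may want to check description lengths in bulk. Here is a minimal Python sketch that trims an overlong description at a word boundary. The 160-character limit is the figure used in this guide, and the function name is hypothetical.

```python
MAX_META_LENGTH = 160  # limit used in this guide; the real cutoff can vary


def fit_meta_description(text: str, limit: int = MAX_META_LENGTH) -> str:
    """Return the text unchanged if it fits, else cut it at a whole word."""
    text = " ".join(text.split())  # collapse stray whitespace
    if len(text) <= limit:
        return text
    # Reserve three characters for the "..." suffix, then cut at the last space.
    cut = text[: limit - 3]
    if " " in cut:
        cut = cut[: cut.rfind(" ")]
    return cut.rstrip(" ,;:.") + "..."
```

Trimming automatically is a safety net rather than a substitute for writing a description that fits, since a hand-written ending usually reads better than an ellipsis.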

Using Headers and Subheaders Properly

Proper use of headers (H1, H2, H3) and subheaders organizes your content, making it more readable for users and understandable for search engines. Each product page should have a single H1 tag, typically the product name.

Subsequent sections can use H2 or H3 tags to outline features, specifications, and customer reviews.
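To spot-check a product page, you could count its heading tags with a short script. This sketch uses only Python’s standard library and assumes you already have the page’s HTML as a string; the helper names are made up for the example.

```python
from html.parser import HTMLParser


class HeadingCounter(HTMLParser):
    """Count heading tags (h1 to h6) in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.counts = {f"h{level}": 0 for level in range(1, 7)}

    def handle_starttag(self, tag, attrs):
        # HTMLParser passes tag names in lowercase.
        if tag in self.counts:
            self.counts[tag] += 1


def heading_report(html: str) -> dict:
    """Return a dict such as {"h1": 1, "h2": 3, ...} for the given HTML."""
    parser = HeadingCounter()
    parser.feed(html)
    parser.close()
    return parser.counts


def has_single_h1(html: str) -> bool:
    """True when the page contains exactly one H1 tag."""
    return heading_report(html)["h1"] == 1
```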

Image Optimization

An entire page full of text is boring, and this is where images give your site a visual boost. However, using unoptimized images on your website can be just as bad, so optimizing images is a must to enhance your WooCommerce SEO.

Here’s how to optimize your product images effectively:

Choosing the Right File Format

Selecting the appropriate image file format balances quality and file size. JPEG is ideal for photographs due to its high compression rates, reducing file size without significant quality loss. PNG suits images requiring transparency or those with fewer colors, offering higher quality but larger file sizes. GIFs are best for simple graphics or animations but are less common for product images.

Compressing Images Without Quality Loss

Large image files can slow down your website, so it’s best to compress images to reduce their file size while maintaining quality. Tools like TinyPNG and ImageOptim can compress images effectively.

Additionally, using next-gen formats like WebP can offer superior compression that further enhances load times.

Adding ALT Text with Relevant Keywords

ALT text provides a textual description of images, aiding both accessibility and SEO. Screen readers use ALT text to describe images to visually impaired users, while search engines use it to understand image content.

Including relevant keywords in ALT text can improve search rankings. To do so, ensure ALT text is descriptive and concise. It should also accurately reflect the image content without keyword stuffing.
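A quick way to find images that still need ALT text is to scan a page’s HTML. The following is a minimal sketch using Python’s standard library, with hypothetical names. Note that an empty ALT attribute can be intentional for purely decorative images, so treat the results as a review list rather than a list of errors.

```python
from html.parser import HTMLParser


class AltTextAuditor(HTMLParser):
    """Collect the src of every <img> whose alt attribute is missing or empty."""

    def __init__(self) -> None:
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = (attributes.get("alt") or "").strip()
        if not alt:
            self.missing_alt.append(attributes.get("src") or "(no src)")


def images_missing_alt(html: str) -> list:
    """Return the src values of images without usable ALT text."""
    auditor = AltTextAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.missing_alt
```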

URL Optimization

Well-structured, readable URLs can boost your WooCommerce SEO by making it easier for search engines to index your pages and for users to navigate your site.

While customizing URLs, it’s important to avoid certain pitfalls that can negatively impact your site’s performance and SEO. One common mistake is removing base prefixes like /product/ or /product-category/ from your URLs.

Although this might make URLs appear cleaner, it can lead to issues with WordPress’s ability to distinguish between different content types, potentially causing performance problems and duplicate content issues. Therefore, it’s advisable to retain these base prefixes to maintain a clear and functional URL structure.

Content Optimization

On any website, content is king, and WooCommerce stores are no exception. Here are some ways to further optimize your website content.

Write Engaging Product Descriptions

Crafting unique and compelling product descriptions is vital. Instead of using generic or manufacturer-provided text, create descriptions that highlight the unique features and benefits of each product. The main goal is to provide valuable information to potential customers while differentiating your product from competitors.

Incorporating Keywords Naturally

Integrating relevant keywords into your product titles and descriptions is crucial for search engine visibility. However, it’s important to do this naturally to maintain readability.

Avoiding Duplicate Content Issues

Duplicate content can negatively impact your site’s SEO performance, so make sure that each of your product pages has unique content. As a best practice, avoid copying descriptions from other products or manufacturers.

You should also be cautious with similar product information across different pages, as search engines may penalize this duplication. Utilizing tools like Copyscape can help identify and address duplicate content on your website.

Technical SEO for WooCommerce

Improving Site Speed

E-commerce sites see their highest conversion rates at load times between 1 and 2 seconds. Conversion rates average 3.05% at 1 second, fall below 2% after that, and drop to 0.67% by 4 seconds.

Here’s how to boost your store’s performance for better WooCommerce SEO:

- Implement Caching: Use caching solutions to store static versions of your pages, allowing for quicker access. Plugins such as WP Rocket facilitate efficient caching.

- Code Minification: Shrink the size of CSS, JavaScript, and HTML files by eliminating extra characters such as spaces, line breaks, and comments.

- Enable GZIP Compression: Compress your website files to decrease their size, leading to faster load times. Many hosting providers offer GZIP compression as a feature.
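To see why minification and GZIP compression help, here is a small Python sketch of both ideas. The minifier is deliberately naive (for example, it does not protect whitespace inside quoted strings), so treat it as a demonstration rather than a replacement for a plugin or build tool. The function names are made up for this example.

```python
import gzip
import re


def minify_css(css: str) -> str:
    """Naive CSS minifier: strips comments and unnecessary whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop comments
    css = re.sub(r"\s+", " ", css)                        # collapse whitespace
    css = re.sub(r"\s*([{};,])\s*", r"\1", css)           # trim around punctuation
    css = re.sub(r":\s+", ":", css)                       # "color: red" -> "color:red"
    return css.strip()


def gzip_size(text: str) -> int:
    """Size in bytes of the text after GZIP compression."""
    return len(gzip.compress(text.encode("utf-8")))
```

Comparing `len(css)` with `len(minify_css(css))`, and then with `gzip_size(minify_css(css))`, shows how each step shrinks what the browser has to download. In practice your caching plugin and hosting provider handle both steps for you.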

Why Mobile-First Indexing Matters

Around 60% of the total web traffic globally comes from mobile devices.

With Google’s mobile-first indexing, the mobile version of your website becomes the primary reference for indexing and ranking, highlighting the increasing dominance of mobile internet use.

How to Make Your WooCommerce Store Mobile-Friendly

Start by selecting a responsive, lightweight theme that adapts to all screen sizes. Responsive designs eliminate the need for separate mobile sites.

Accelerated Mobile Pages (AMP) are another excellent way to boost mobile performance. With AMP, your web pages are streamlined using simplified HTML and CSS, enabling faster loading times and reduced data usage.

Navigation for a mobile-friendly store should be intuitive and user-friendly. Use clean menus with minimal options to avoid clutter, and consider a “hamburger menu” for a space-saving solution on smaller screens.

Moreover, you can simplify your checkout pages for easier purchasing. Allow guest checkouts to avoid forcing users to create accounts. Customize the checkout fields with plugins like Checkout Field Editor to make the process faster and easier. You also need to let customers edit their carts directly from the checkout page to reduce cart abandonment.

Lastly, focus on fast page loading speeds by enabling caching, compressing files, and optimizing server response times. Also, make sure buttons and interactive elements are touch-friendly to enhance usability.

Fixing Broken Links

Broken links can frustrate visitors, so you need to eliminate them on your website. Here’s how to address them effectively:

- Use a Broken Link Checker Plugin: Install a plugin like Broken Link Checker, which scans your website for broken links and missing images. It provides a report of all issues and you can address them directly from your WordPress dashboard.

- Leverage Google Search Console: Open Google Search Console and go to the page indexing report (listed as ‘Pages’ under Indexing, and labeled ‘Coverage’ in older versions). Here, you’ll find a list of errors, including 404 (not found) pages, that Google has identified on your site.

- Utilize Online Tools: Web-based tools like Ahrefs and SEMrush offer site audit features that can detect broken links. These platforms crawl your site and provide comprehensive reports on link health.

- Implement Redirects for Moved Content: If a page has been moved or its URL changed, set up a 301 redirect to guide both users and search engines to the new location.

- Regular Monitoring: Regularly scan your website for broken links to preserve your SEO efforts. Scheduled audits using the aforementioned tools can help in proactive link management.
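If you are curious how a link checker works under the hood, the idea can be sketched in a few lines of Python. In this example, `get_status` is a hypothetical function that you supply. In a real check it might send an HTTP request and return the response code, but it is left abstract here so the sketch runs without network access.

```python
from html.parser import HTMLParser
from typing import Callable
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def find_broken_links(html: str, base_url: str, get_status: Callable[[str], int]) -> list:
    """Return (url, status) pairs for links whose status code is 400 or higher."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    broken = []
    for href in collector.links:
        if href.startswith(("#", "mailto:", "tel:")):
            continue  # in-page anchors and contact links are not fetchable pages
        url = urljoin(base_url, href)  # resolve relative links against the page URL
        status = get_status(url)
        if status >= 400:
            broken.append((url, status))
    return broken
```

Each URL this returns is a candidate for either a content fix or a 301 redirect to the page’s new location.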

Creating and Submitting an XML Sitemap for WooCommerce SEO

An XML sitemap acts as a roadmap for search engines, guiding them to your website’s most important pages, such as products, categories, and blog posts. For WooCommerce stores, a sitemap ensures your entire site is properly indexed.

How to Generate an XML Sitemap

Generating an XML sitemap is simple with SEO plugins like Yoast SEO or Google XML Sitemaps. Here’s how to do it:

1. Choose your preferred plugin, then install and activate it.

2. Navigate to the plugin’s settings and enable the XML sitemap feature.

Once activated, your sitemap will be automatically created and accessible at a URL like www.yourstore.com/sitemap_index.xml.

Tip: Use the plugin’s settings to customize your sitemap. For example, exclude pages like the cart or checkout that don’t need to appear in search results.
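Your SEO plugin builds the sitemap for you, but it can help to see what the file contains. Below is a simplified Python sketch that writes a sitemap and skips the cart and checkout pages. The `/cart/` and `/checkout/` paths are assumed to be your store’s slugs, and a real plugin typically adds more detail, such as last-modified dates and a sitemap index.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
EXCLUDED_PATHS = ("/cart/", "/checkout/")  # assumed slugs; change to match your store


def build_sitemap(urls, excluded=EXCLUDED_PATHS) -> str:
    """Return sitemap XML for the given URLs, skipping excluded paths."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        # Compare the path only, so the domain never affects the match.
        if urlparse(url).path.startswith(tuple(excluded)):
            continue
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```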

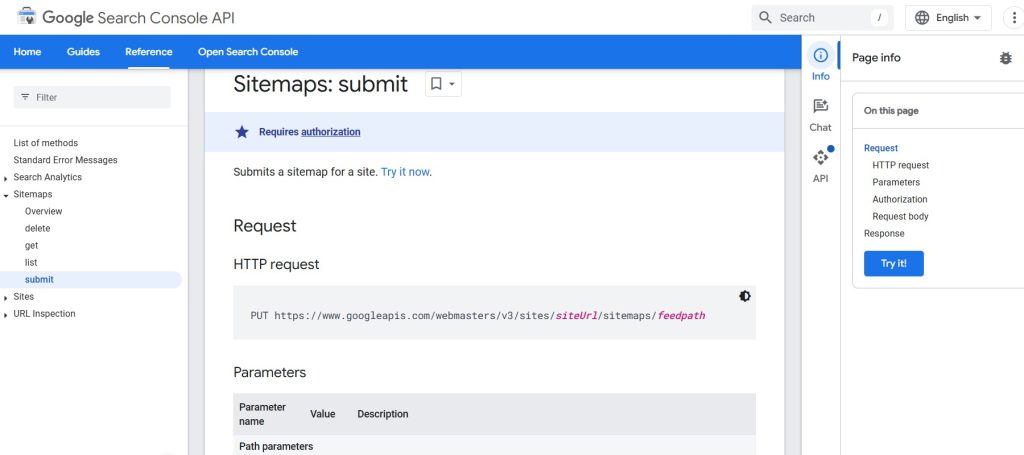

Submitting Your Sitemap to Search Engines

Google Search Console

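- Log in to Google Search Console and select your property.

- Click on ‘Sitemaps’ in the left-hand menu.

- Enter sitemap_index.xml in the field provided and click ‘Submit’.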

Bing Webmaster Tools

- Log in to Bing Webmaster Tools.

- Select your site from the dashboard.

- Click on ‘Sitemaps’ in the left-hand menu.

- Enter the full URL of your sitemap (e.g., https://www.yourstore.com/sitemap_index.xml) and submit it.

After submitting, check for any errors or warnings and resolve them promptly.

Troubleshooting and Optimization

- Verify Sitemap Functionality: Use tools like Google Search Console’s coverage report to ensure all important pages are indexed.

- Handle Errors: If search engines encounter issues with your sitemap, review the error messages and adjust your sitemap settings in the plugin.

- Update Regularly: When you add new products or pages, your sitemap updates automatically. However, resubmit it periodically to ensure all new content is indexed.

Structured Data for WooCommerce

Structured data is a way to organize information on your WooCommerce website in a format that search engines, like Google, can easily understand.

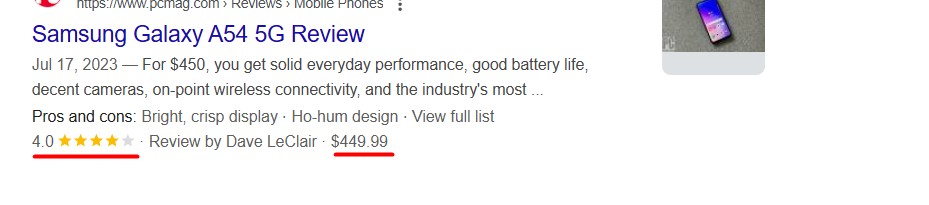

For example, structured data can help highlight key details about your products, like ratings, prices, or stock status, directly in search results. This additional information is often displayed as rich snippets that make your listings more attractive to users.

Rich Snippets for Products and Reviews

In this example, the product price and rating can be spotted easily on the search engine page.

Implementing Schema Markup

Schema markup is a form of structured data that provides context to your website’s content, helping search engines interpret and represent it accurately. To implement schema markup in your WooCommerce store, consider the following steps:

- Choose a Suitable Plugin: Plugins such as Schema Pro or SNIP are designed to simplify the addition of schema markup to your WooCommerce products. These tools offer user-friendly interfaces to manage structured data.

- Configure Product Schema: After installing a structured data plugin like Schema Pro, you need to set it up so it understands your product details. This setup involves matching the information about your products (like name, description, price, and stock) to specific fields the plugin recognizes.

- Validate Your Markup: It’s essential to ensure that your schema markup is correctly implemented. Utilize tools like Google’s Rich Results Test to verify the accuracy of your structured data. Enter your product page URL into the tool to identify and rectify any errors or warnings.
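Schema plugins generate this markup for you, but seeing the output makes the field mapping easier to follow. Here is a minimal Python sketch that builds schema.org Product markup in JSON-LD form. The function name and its parameters are made up for this example, and a plugin’s real output will usually include more properties, such as images and SKUs.

```python
import json


def product_jsonld(name, description, price, currency, in_stock,
                   rating=None, review_count=None) -> str:
    """Build schema.org Product markup as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": (
                "https://schema.org/InStock"
                if in_stock
                else "https://schema.org/OutOfStock"
            ),
        },
    }
    # Only add rating data when the product actually has reviews.
    if rating is not None and review_count:
        data["aggregateRating"] = {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        }
    return json.dumps(data, indent=2)
```

On a live page, the resulting JSON sits inside a `<script type="application/ld+json">` tag. Pasting your product URL into the Rich Results Test shows whether Google can read it.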

Starting a Blog on Your WooCommerce Store

A blog is a powerful way to consistently share new and relevant content with your audience. Since WordPress is built for blogging, setting up a blog on your store is hassle-free. Listed below are the benefits of adding blogs to your website:

1. Highlight Your Products: A blog lets you showcase how your products solve problems or fit seamlessly into your customers’ lives. Share in-depth product guides, tutorials, or creative ways to use your items. It’s also an excellent way to engage first-time visitors who may not purchase immediately but will return for your helpful content.

2. Increase Customer Loyalty: By posting regularly, whether it’s product tips or customer spotlights, you show your audience that you value them. Providing consistent value encourages repeat visits and strengthens relationships with your customers.

3. Show Your Expertise: Blogging allows you to demonstrate your knowledge and passion. Answering common questions and sharing industry insights builds trust with your audience. It can also lead to backlinks and partnerships from others in your niche.

4. Boost SEO Rankings: Search engines favor sites with fresh, high-quality content. Blogs help by targeting specific keywords and linking to other pages on your site. At the same time, visitors also tend to stay longer when you have engaging content.

5. Generate Social Media Content: Blogs make it easy to create content for social media. Evergreen posts, like tutorials or gift guides, can be shared repeatedly.

How to Start a Blog for Your WooCommerce Store

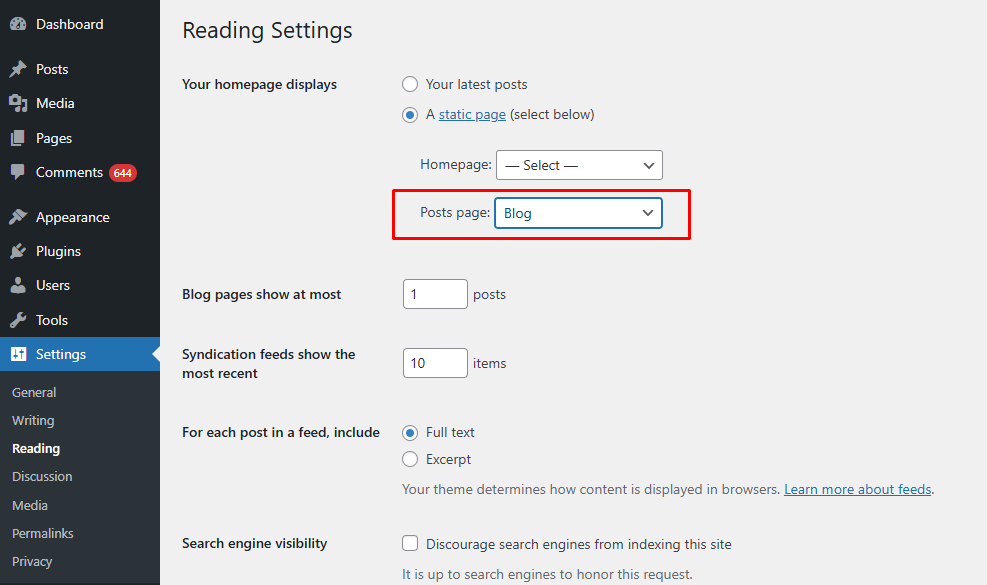

Starting a blog for your WooCommerce store is simple, thanks to WordPress’s built-in blogging feature. Begin by creating a new page in your WordPress dashboard and naming it something like “Blog” or “News.”

Next, go to Settings → Reading, select your new page in the Posts page dropdown, and save the changes.

To create posts, navigate to Posts → Add New, where you can add text, images, and videos using the block editor—don’t forget to include a featured image for your blog feed.

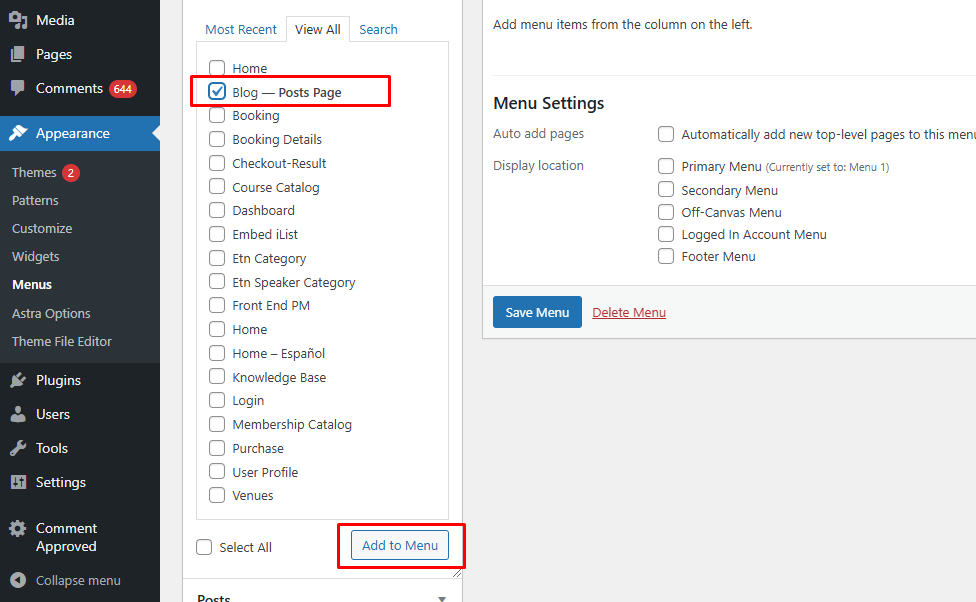

Finally, make your blog easy to access by adding it to your site’s main menu. Go to Appearance → Menus, check the box next to your blog page, click Add to Menu, and save your changes.

Your blog is now live and ready for you to share engaging content with your audience!

Topic Ideas Tailored to Your Niche

For a successful blog, start by keeping a list of post ideas handy and jotting them down as they come to you. Often, brainstorming topics can be more challenging than writing the posts themselves, so this practice helps you avoid writer’s block.

Focus on three to five main categories to organize your content when starting out. Label each post with its relevant category, as this makes it easier for readers to find the content they’re interested in. Finally, use plenty of images in your posts to keep readers engaged.

Creating High-Quality Content

The approach to content creation differs based on whether your store is service-based or product-based, as each has unique goals and customer needs.

For service-based businesses, content is designed to highlight expertise, build trust, and showcase the value of intangible offerings. It focuses on demonstrating how services solve problems or address customer needs effectively.

This type of content includes case studies that showcase success stories, how-to guides that educate potential clients, service walkthroughs that explain processes, testimonials from satisfied customers, and detailed blogs about industry trends or best practices.

On the other hand, for product-based businesses, the content emphasizes the tangible features and benefits of the products. It aims to show how these products solve specific problems or integrate seamlessly into the customer’s lifestyle.

Examples include detailed product descriptions, customer reviews, tutorials on using the products, product comparisons to highlight unique features, and visually rich media such as videos or image galleries to make the products more appealing and informative.

Tips for Creating Shareable Content

- Understand Your Audience: Identify your customers’ interests and pain points. Creating content that resonates with them increases the likelihood of sharing.

- Craft Compelling Headlines: An engaging headline grabs attention and encourages clicks. Ensure it accurately reflects the content and avoid clickbait.

- Use High-Quality Visuals: Incorporate images and videos to make your content more appealing. Visuals can convey information quickly and are more likely to be shared on social media.

- Provide Value: Offer actionable insights, tips, or solutions. Content that educates or solves problems is more likely to be shared.

- Encourage Sharing: Include social sharing buttons and calls to action within your content. Making it easy to share increases the chances of your content reaching a broader audience.

Internal Linking Strategies

An internal link connects one page of your website to another. Users rely on links to navigate your website and locate the information they’re looking for, while search engines use them to explore your site’s pages.

As a website owner, you have control over your internal links. Use them wisely to guide visitors and search engines to your most valuable and important pages.

Integrating links from your blog posts to relevant product pages is a great way to use internal links to enhance user engagement and drive sales.

Best Practices for Internal Linking

- Use Clear Anchor Text: When adding links, make sure the text you link (called anchor text) clearly describes the page it leads to. For example, instead of vague terms like “click here,” use something specific like “summer dresses collection.” This helps users understand where the link will take them and provides useful context for search engines.

- Place Links Strategically: Add your most important internal links in prominent places within your content, like near the top or within the main body of your text. Search engines often give more weight to links in these positions, so they’re more effective.

- Keep Links Organized: Structure your internal links to follow a logical flow that reflects your site’s organization. This helps search engines understand how your pages are connected and ensures that the value of your links (link equity) is distributed effectively across your site.

- Review Links Regularly: Check your internal links from time to time to make sure they still work and lead to relevant pages.
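As part of a regular review, you could scan a page for vague anchor text. The Python sketch below shows one way to do it. The list of “vague” phrases is an assumption for this example, so extend it with whatever generic wording appears on your own site.

```python
from html.parser import HTMLParser

# Assumed list of generic phrases; not an official or exhaustive set.
VAGUE_ANCHORS = {"click here", "here", "read more", "learn more", "this page"}


class AnchorTextCollector(HTMLParser):
    """Collect (href, anchor text) pairs for every link in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.anchors = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only record text while inside a link that has an href.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())
            self.anchors.append((self._href, text))
            self._href = None


def vague_links(html: str) -> list:
    """Return (href, text) pairs whose anchor text is on the vague list."""
    collector = AnchorTextCollector()
    collector.feed(html)
    collector.close()
    return [(href, text) for href, text in collector.anchors
            if text.lower() in VAGUE_ANCHORS]
```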

Off-Page SEO Strategies

Building Backlinks

Backlinks, also called inbound links, are links from other websites to yours. When a trusted website links to your WooCommerce store, search engines see this as proof that your content is high quality. This boosts your site’s authority and improves its position in search results.

Backlinks can also bring referral traffic, which not only increases traffic but also improves the chances of making sales.

Strategies for Earning High-Quality Backlinks

1. Guest Posting: You can contribute articles to reputable blogs or websites within your niche. In exchange, you can include a link back to your WooCommerce store.

2. Broken Link Building: Identify broken links on other websites that are relevant to your niche. Reach out to the site owners, informing them of the broken link and suggesting your content as a replacement. This tactic provides value to the website owner while earning you a backlink.

3. Leveraging Influencer Marketing: Engage with influencers in your industry to promote your products or content. When influencers share your content or link to your store, it can lead to high-quality backlinks and increased traffic.

4. Participating in Industry Forums and Communities: Actively participate in online forums and communities related to your niche. By providing valuable insights and including a link to your store in your profile or signature, you can generate backlinks and drive targeted traffic.

Social Media and Its Role in SEO

Using social media as part of your WooCommerce strategy can significantly improve your SEO. Social platforms help bring visitors to your store by sharing engaging posts, promotions, and updates.

Interactions like shares and comments on social media can also increase your reach. Encouraging user-generated content (UGC), such as reviews and testimonials, adds a personal touch and builds trust with potential customers.

Strategies for Effective Social Media SEO

Complete Your Social Media Profiles

To optimize your social media profiles for off-page SEO, start by completing them thoroughly. Use consistent branding across your profile picture, bio, and banners to reflect your brand identity and make your profiles recognizable.

Include relevant keywords in your bio, descriptions, and posts to enhance search visibility. Add links to your WooCommerce store, blog, or product pages. Additionally, ensure your contact information is accurate and up-to-date to improve local SEO and make it easier for customers to reach you.

Engage in Social Listening

Social listening is the process of monitoring social media platforms, forums, and other online channels for mentions of your brand, products, competitors, or industry topics. It involves analyzing these conversations to gain insights into what people are saying about your business and identifying trends or opportunities to engage.

To engage in social listening, you can:

- Use tools like Google Alerts to track when your WooCommerce store or products are mentioned online.

- Respond to positive mentions to build goodwill and engage with your audience.

- Address negative feedback constructively to improve customer trust.

- Follow conversations about your competitors or industry trends to identify gaps or opportunities where your WooCommerce store can stand out.

Advanced WooCommerce SEO Tactics

Voice Search Optimization

Almost 20% of people globally are already using voice search. As more people use voice-activated devices to shop online, it’s important to include voice search when optimizing your WooCommerce SEO.

To get started, make your content conversational and easy to understand. Use natural language in your titles and descriptions, similar to how people talk. For example, instead of “best running shoes,” include phrases like, “What are the best running shoes for marathon training?”

You should also focus on long-tail keywords—specific phrases that closely match how users ask questions through voice.

Finally, answer common questions in your content. This can be done by adding FAQ sections on your product page.
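
One way to make a product-page FAQ machine-readable is schema.org FAQPage structured data. The sketch below is only an illustration: the question and answer text are hypothetical, and adding this markup does not guarantee any particular treatment in search results.

```python
import json


def build_faq_jsonld(faqs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }


# Hypothetical FAQ content for a product page.
faqs = [
    (
        "What are the best running shoes for marathon training?",
        "Look for cushioned, lightweight shoes built for long distances.",
    ),
]

# The JSON output would be placed inside a <script type="application/ld+json"> tag.
print(json.dumps(build_faq_jsonld(faqs), indent=2))
```

Many SEO plugins can generate this kind of markup for you, so treat the script as a way to understand the structure rather than something you must maintain by hand.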

Local SEO for WooCommerce

Local SEO focuses on optimizing your online presence for local searches, which is especially useful for WooCommerce stores serving specific areas.

To improve local SEO, include location-specific keywords, like city or neighborhood names, in your website content. Also, make sure your store’s name, address, and phone number (NAP) are consistent across all platforms.
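
To make the NAP consistency check concrete, here is a minimal sketch that compares listings against a reference record. The store details and platform names are made up, and the normalization (case, spacing, phone digits) is an assumption about what counts as "the same".

```python
import re


def normalize_nap(name, address, phone):
    """Reduce a NAP record to a comparable form: lowercase, single spaces, phone digits only."""
    def clean(text):
        return re.sub(r"\s+", " ", text).strip().lower()

    return (clean(name), clean(address), re.sub(r"\D", "", phone))


def inconsistent_listings(reference, listings):
    """Return the platforms whose NAP differs from the reference record."""
    ref = normalize_nap(*reference)
    return [
        platform
        for platform, nap in listings.items()
        if normalize_nap(*nap) != ref
    ]


# Hypothetical records: the directory listing spells out "Street".
reference = ("Example Store", "12 Main St, Springfield", "(555) 010-0199")
listings = {
    "website": ("Example Store", "12 Main St,  Springfield", "555-010-0199"),
    "directory": ("Example Store", "12 Main Street, Springfield", "555 010 0199"),
}

print(inconsistent_listings(reference, listings))
```

The check is deliberately strict: "St" and "Street" count as different, so the directory listing is flagged for review.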

Setting Up Google Business Profile

Creating a Google Business Profile is a key step in local WooCommerce SEO. Here’s how to set it up:

- Visit the Google Business Profile website: Sign in with your Google account or create one if necessary.

- Enter Your Business Information: Provide accurate details, including your business name, address, phone number, and website URL.

- Verify Your Business: Google will send a verification code via phone, email, or even physical mail. Enter this code in your Google Business Profile account to confirm your business location.

- Optimize Your Profile: Add high-quality photos, business hours, and a compelling description of your products or services. Regularly update your profile to reflect any changes.

International SEO for WooCommerce

Expanding your WooCommerce store to a global audience requires effective international SEO strategies. This involves optimizing your store for multiple languages and currencies.

Optimizing for Multilingual Stores

To reach customers who speak different languages, make sure your store content is available in their native tongue. You can do this by:

- Using Translation Plugins: Tools like TranslatePress or Weglot make it easy to translate your store content.

- Implementing hreflang Tags: These are attributes on HTML link tags that help search engines understand the language and region each version of a page targets.

- Creating SEO-Friendly URLs: Use subdirectories to reflect the language of your content, such as “example.com/es/” for Spanish.
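
As a rough illustration of how hreflang tags and language subdirectories fit together, the sketch below builds the link tags for one page. The function name, the example domain, and the choice of English as the x-default version are assumptions; translation plugins usually output these tags for you.

```python
def hreflang_tags(base_url, path, languages, default_lang="en"):
    """Build <link rel="alternate" hreflang="..."> tags for language subdirectories."""
    base = base_url.rstrip("/")
    trimmed = path.strip("/")
    suffix = f"/{trimmed}/" if trimmed else "/"

    tags = [
        f'<link rel="alternate" hreflang="{lang}" href="{base}/{lang}{suffix}" />'
        for lang in languages
    ]
    # x-default marks the fallback version for visitors whose language is not listed.
    tags.append(
        f'<link rel="alternate" hreflang="x-default" href="{base}/{default_lang}{suffix}" />'
    )
    return tags


for tag in hreflang_tags("https://example.com", "shop/running-shoes", ["en", "es"]):
    print(tag)
```

Each language version of the page should carry the full set of tags, including one pointing to itself.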

Optimizing for Multicurrency Stores

Offering multiple currency options simplifies shopping for international customers. Here’s how you can set it up:

- Use Currency Switcher Plugins: Plugins like WooCommerce Multilingual & Multicurrency let customers view prices and pay in their preferred currency.

- Automate Exchange Rates: Ensure prices are accurate using systems that update exchange rates in real-time.

- Display Currency by Location: Use geolocation tools to automatically show prices in each visitor’s local currency.
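
The logic behind showing a localized price can be sketched in a few lines. Everything here is illustrative: the exchange rates and the country-to-currency table are hypothetical, and a real store would rely on its currency plugin and a live rate source.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical rates relative to USD; a live store would refresh these automatically.
RATES = {"USD": Decimal("1"), "EUR": Decimal("0.90"), "GBP": Decimal("0.80")}

# Hypothetical mapping from a visitor's country code to a display currency.
COUNTRY_CURRENCY = {"US": "USD", "DE": "EUR", "FR": "EUR", "GB": "GBP"}


def localized_price(usd_price, country_code, base="USD"):
    """Convert a USD price for the visitor's country, falling back to the base currency."""
    currency = COUNTRY_CURRENCY.get(country_code, base)
    amount = (Decimal(usd_price) * RATES[currency]).quantize(
        Decimal("0.01"), rounding=ROUND_HALF_UP
    )
    return amount, currency


print(localized_price("49.99", "DE"))
print(localized_price("49.99", "JP"))  # unknown country falls back to USD
```

Using Decimal instead of floating-point numbers avoids rounding surprises in displayed prices.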

Seasonal and Event-Based SEO Strategies

Seasonal and event-based website content helps you attract more customers during specific times of the year or around special events. These strategies focus on optimizing your store for keywords and promotions tied to those occasions, making it easier for shoppers to find your products when they’re most likely to buy.

For example, during the holiday season, many people search for gifts. If your WooCommerce store sells clothing, you can create a category like “Holiday Gift Ideas” and optimize it for keywords such as “best gifts for Christmas.” This way, when people search for these terms, your store is more likely to show up in search results.

Troubleshooting Common WooCommerce SEO Issues

Improving your WooCommerce store’s SEO means solving technical problems that can hurt your search rankings. Now let’s take a look at some common errors and how to troubleshoot these problems when they occur.

Resolving Crawl Errors

Crawl errors happen when search engines like Google can’t access parts of your website. Common problems include server errors (when the server is overloaded or misconfigured), DNS errors (caused by incorrect domain settings), and robots.txt errors (when search engines can’t read the file that tells them what to crawl).

To fix these, use Google Search Console’s ‘Coverage’ report (labeled ‘Pages’ under Indexing in current versions of Search Console) to find the errors. For server errors, make sure your hosting is properly set up. When it comes to DNS issues, check that your domain settings are correct.

For robots.txt errors, ensure the file is accessible and formatted correctly. Keep checking for errors regularly to keep your site running smoothly.
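
If you want to test a robots.txt file before publishing it, Python's standard library includes a parser. The sketch below uses a made-up robots.txt that accidentally blocks product pages; note that this parser follows the basic standard and may not match every matching rule Google applies.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt content disallows for a crawler."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt with an accidental rule blocking all product pages.
robots_txt = """\
User-agent: *
Disallow: /cart/
Disallow: /checkout/
Disallow: /product/
"""

urls = [
    "https://example.com/product/blue-shoes/",
    "https://example.com/shop/",
]
print(blocked_urls(robots_txt, urls))
```

Here the product URL is reported as blocked, which is the kind of mistake worth catching before it affects indexing.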

Fixing Canonicalization Issues

Canonicalization issues happen when the same content is accessible through different URLs. This confuses search engines, making them see duplicate pages and dividing your page’s authority, which hurts your SEO. Common causes include different URL formats (like HTTP vs. HTTPS or with and without “www”) and session IDs or tracking parameters.

To fix this:

- Use Canonical Tags: Add <link rel="canonical" href="URL"> to your pages to tell search engines which URL is the main one.

- Set Up 301 Redirects: Redirect duplicate URLs to the main version so all traffic and link values go to the correct page.

- Keep Internal Links Consistent: Always use the same URL format within your site to support the canonical version.
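
To show how several URL variants collapse into one canonical address, here is a small sketch. The preferred host and the list of tracking parameters are assumptions for illustration; SEO plugins normally output the canonical tag automatically.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that never change page content; adjust for your store.
TRACKING_PARAMS = {
    "utm_source", "utm_medium", "utm_campaign", "utm_term",
    "utm_content", "gclid", "fbclid", "sessionid",
}


def canonical_url(url, preferred_host="www.example.com"):
    """Force HTTPS and the preferred host, and drop tracking or session parameters."""
    parts = urlsplit(url)
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    ]
    return urlunsplit(("https", preferred_host, parts.path or "/", urlencode(query), ""))


def canonical_tag(url, preferred_host="www.example.com"):
    """Build the canonical link tag for a page URL."""
    return f'<link rel="canonical" href="{canonical_url(url, preferred_host)}">'


print(canonical_tag("http://example.com/product/blue-shoes/?utm_source=news&color=blue"))
```

Parameters that do change the content, such as color=blue in this example, are kept, so decide case by case whether filtered views deserve their own canonical URL.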

Dealing with Low-Quality or Thin Content

Low-quality or thin content provides little value to users and can harm your SEO rankings. To address this:

- Conduct a Content Audit: Review your site’s content to identify pages with minimal or poor-quality information.

- Enhance Content Quality: Update thin pages with comprehensive, relevant, and engaging information that meets user intent.

- Remove or Consolidate Duplicate Content: Eliminate redundant pages or merge similar content to improve overall quality.

- Regularly Update Content: Keep your content fresh and up-to-date to maintain relevance and user engagement.
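
A content audit can start with something as simple as a word count per page. The sketch below assumes you have already exported page text into a dictionary; the 150-word threshold is an arbitrary starting point, not a rule used by search engines.

```python
import re


def find_thin_pages(pages, min_words=150):
    """Return (url, word_count) pairs below the threshold, shortest first."""
    counts = {url: len(re.findall(r"\b\w+\b", text)) for url, text in pages.items()}
    thin = [(url, count) for url, count in counts.items() if count < min_words]
    return sorted(thin, key=lambda item: item[1])


# Hypothetical page texts keyed by URL path.
pages = {
    "/product/blue-shoes/": "Lightweight blue running shoes.",
    "/product/trail-shoes/": "Durable trail shoes with deep lugs for grip on wet and rocky terrain.",
}
print(find_thin_pages(pages, min_words=10))
```

Word count is only a rough signal, so review flagged pages by hand before rewriting or merging them.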

Key Metrics to Measure Your WooCommerce SEO Success

- Organic Traffic: This metric indicates the number of visitors arriving at your store through unpaid search results. An increase in organic traffic suggests that your SEO strategies are effectively improving your store’s visibility.

- Conversion Rate: This measures the percentage of visitors who complete a desired action, such as making a purchase.

- Bounce Rate: This represents the percentage of visitors who leave your site after viewing only one page. A high bounce rate may indicate issues with page relevance or load times, which can negatively impact SEO.

- Average Session Duration: This metric shows the average time users spend on your site. Longer sessions typically reflect engaging content and a positive user experience.

- Keyword Rankings: Monitoring the positions of your targeted keywords in search engine results helps assess the effectiveness of your SEO efforts.
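
The rate-based metrics above are simple ratios. This sketch computes them from raw totals using the definitions given in the list; the input numbers are hypothetical, and analytics tools may define bounce rate or conversion rate slightly differently.

```python
def seo_metrics(sessions, single_page_sessions, orders, total_session_seconds):
    """Compute conversion rate, bounce rate, and average session duration from totals."""
    if sessions <= 0:
        raise ValueError("sessions must be positive")
    return {
        "conversion_rate_pct": round(100 * orders / sessions, 2),
        "bounce_rate_pct": round(100 * single_page_sessions / sessions, 2),
        "avg_session_duration_s": round(total_session_seconds / sessions, 1),
    }


# Hypothetical monthly totals exported from an analytics tool.
print(seo_metrics(sessions=2000, single_page_sessions=900, orders=50,
                  total_session_seconds=240000))
```

With these inputs, 50 orders from 2,000 sessions is a 2.5% conversion rate, 900 single-page sessions is a 45% bounce rate, and the average session lasts 120 seconds.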

SEO Best Practices for WooCommerce

Staying Updated with Google Algorithm Changes

Google frequently updates its search algorithms to improve the relevance of its results. For instance, the November 2024 core update affected search rankings globally and emphasized high-quality, relevant content written primarily to provide value to readers. Keep track of these updates and adjust your website accordingly.

Use A/B testing for Page Layouts, Content, and Calls to Action

A/B testing is a method where you compare two versions of a webpage to see which one performs better. This is important for optimizing your WooCommerce store because it helps you understand what works best for your audience.

Testing different layouts, headlines, or calls to action (CTAs) can reveal what encourages visitors to stay on your site, click on products, or complete purchases.
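
Before declaring a winner, it helps to check whether the difference between two versions could be due to chance. The sketch below uses a two-proportion z-test, which is one common approach and an assumption on our part; the visitor and conversion counts are hypothetical.

```python
from math import erf, sqrt


def ab_test(conv_a, visitors_a, conv_b, visitors_b):
    """Compare two conversion rates with a two-proportion z-test (two-sided)."""
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return rate_a, rate_b, z, p_value


# Hypothetical results: version A converts 100 of 2,000 visitors, version B 130 of 2,000.
rate_a, rate_b, z, p_value = ab_test(100, 2000, 130, 2000)
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  z = {z:.2f}  p = {p_value:.3f}")
```

A small p-value (0.05 is a common cutoff) suggests the difference is unlikely to be random noise. The test assumes reasonably large samples and at least some conversions in the data.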

Adopting AI and Automation Tools for SEO

Artificial intelligence and automation are transforming SEO by streamlining tasks and enhancing data analysis. Google’s integration of AI, such as the Gemini model, aims to provide more personalized search results.

To leverage AI for your WooCommerce SEO:

- Utilize AI-Powered SEO Tools: Platforms like Semrush offer AI-driven features for keyword research, content optimization, and competitive analysis.

- Implement Chatbots for Customer Engagement: AI chatbots can improve user experience by providing instant support. This can increase dwell time, which many SEO practitioners treat as a positive signal.

- Automate Routine SEO Tasks: Use automation tools to handle tasks like monitoring backlinks and tracking keyword rankings. This way, you can focus more on the strategy side of your WooCommerce SEO.

Improving Your WooCommerce Store Security

Even the best WooCommerce SEO strategies won’t matter if your website has security vulnerabilities. According to statistics, around 30,000 websites worldwide fall victim to hacking every day.

A hack can hurt your rankings, especially if you can’t fix the problem quickly. Search engines avoid directing users to unsafe or compromised sites. So fortifying your website security is a must.

Here are some tips to make your WooCommerce store more secure:

1. Choose a Secure Host

Your web host plays a crucial role in keeping your WooCommerce store secure, as it stores your website’s content, WordPress core files, and database. A reliable host ensures these elements are protected from hackers and malware.

Look for features like:

- SSL certificates to secure sensitive customer data like addresses and phone numbers.

- Regular backups so your site can be fully restored in case of any issues.

- Attack monitoring and prevention to detect malware in your files and database quickly.

- Server firewalls to block unauthorized access to your files.

- Up-to-date server software like PHP and MySQL for optimal security and performance.

- File isolation to prevent malware from spreading to other sites or folders on the same server.

- 24/7 customer support to assist you whenever needed.

GreenGeeks, the eco-friendly hosting provider, takes additional steps to protect your account with advanced security measures:

Login Verification Email

GreenGeeks sends login alert emails for all successful logins to your dashboard. Recently, they’ve added a new layer of security: a one-time verification code for unrecognized devices. If you log in from a new device or browser, you’ll need to complete a one-time verification via email, phone, or SMS. Once verified, the device is recognized, and future logins proceed as usual.

Two-factor Authentication (2FA)

GreenGeeks supports full 2FA, the most secure way to protect your account. With 2FA, a one-time code generated on your phone or tablet is required for login. Even if your login credentials are compromised, an attacker won’t be able to access your account without your device.

User Administration / Account Access

GreenGeeks makes it easy to grant account access to multiple users. This feature is perfect for teams or developers who need to manage hosting services, access cPanel, create SSL certificates, or contact support.

2. Use Strong Passwords

While your hosting provider plays a big role in security, it’s also your job to keep your accounts safe. Start by using strong, secure passwords for all accounts linked to your WooCommerce store, including your WordPress site, hosting platform, and domain provider.

Here are some tips for creating secure passwords:

- Use a different password for every account, especially for your WordPress admin.

- Include a mix of uppercase letters, lowercase letters, numbers, and symbols in your passwords.

- Avoid using easy-to-guess words, birthdays, anniversaries, or common phrases.

- Make your password long and complicated—longer passwords are harder for hackers to figure out.
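
If you prefer to generate passwords rather than invent them, the tips above translate into a short script. This is a sketch using Python's secrets module; the default length of 20 and the minimum of 12 are our own assumptions, and a password manager will do the same job.

```python
import secrets
import string


def generate_password(length=20):
    """Generate a random password with lowercase, uppercase, digits, and symbols."""
    if length < 12:
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        password = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until every character class is present.
        if (
            any(c.islower() for c in password)
            and any(c.isupper() for c in password)
            and any(c.isdigit() for c in password)
            and any(c in string.punctuation for c in password)
        ):
            return password


print(generate_password())
```

Generate a separate password for each account, and store them in a password manager rather than reusing one.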

3. Use SSL

An SSL (Secure Sockets Layer) certificate keeps your customers’ information safe by encrypting sensitive data, such as contact form submissions and credit card details, on your e-commerce website. It’s essential for your store’s security and also helps improve your SEO.

There are a few ways to get an SSL certificate, and many hosting providers include it in their plans for free.

If your host doesn’t offer a free SSL, you can buy an SSL certificate from domain registrars or third-party providers like DigiCert or SSL.com (https://www.ssl.com/).

Types of SSL Certificates:

- Single domain SSL: Secures one website.

- Wildcard SSL: Secures a website and its subdomains.

- Multi-domain SSL: Secures multiple websites.

Once you purchase an SSL certificate, follow the provider’s instructions to install it on your server. It’s a straightforward step to protect your customers and your business.
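
After installation, it is worth confirming that the certificate is being served and checking when it expires. The sketch below uses Python's standard ssl module; the function names are our own, and fetch_not_after needs network access to your live site, so it is defined here but not called.

```python
import socket
import ssl
import time


def fetch_not_after(hostname, port=443, timeout=10):
    """Return the 'notAfter' expiry string of the certificate a live host serves."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]


def days_until_expiry(not_after, now=None):
    """Whole days between now (epoch seconds) and the certificate expiry."""
    expires_at = ssl.cert_time_to_seconds(not_after)
    now = time.time() if now is None else now
    return int((expires_at - now) // 86400)
```

For example, days_until_expiry(fetch_not_after("example.com")) returns the number of days left, with your own domain in place of the placeholder. A negative number means the certificate has already expired.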

4. Use Security Plugins

Using a high-quality WooCommerce security plugin is one of the best ways to protect your online store. There are many excellent security plugins designed for WordPress and WooCommerce that can safeguard stores of all sizes.

Here are some of the top features you should look for when choosing a security plugin:

- Real-time backups: Automatically save your site, orders, and files in real-time so you can easily restore the latest version even if your site goes down.

- Malware scanning: Scan your site for malware and vulnerabilities that could harm your store. Some plugins also offer one-click fixes for common threats.

- Downtime monitoring: Receive alerts if your site goes offline so you can quickly address potential hacks or technical issues.

- Anti-spam tools: Block spam from comments, contact forms, and other site interactions.

- Activity logs: Keep track of all changes on your website, including who made them and when so you can quickly spot suspicious activity.

- Website firewall: Protect your site from malicious traffic and potential attacks.

- Brute force attack protection: Safeguard your site against brute force login attempts that could compromise customer data.

- Two-factor authentication (2FA): Add an extra layer of security by requiring a one-time code in addition to a password for logins.

Popular WooCommerce Security Plugins

- Sucuri Security: Offers website firewalls, malware scanning, and real-time monitoring.

- Wordfence: Includes a robust firewall, malware scanner, and login security features like 2FA.

- All In One WP Security & Firewall: A free plugin with features like spam protection, database security, and firewall rules.

- MalCare Security: Focused on one-click malware removal, login protection, and firewall functionality.

5. Use Verified Payment Gateways

Using verified payment gateways on your WooCommerce site is important for keeping your store secure and ensuring smooth transactions.

These gateways follow strict standards like PCI DSS to protect customer information, reducing the chances of fraud and data theft. They also make your store more trustworthy, which can lead to more sales.

Popular options like Stripe, PayPal, Square, Authorize.Net, and Amazon Pay offer strong security features for your payment transactions.

6. Back Up Your Data

Backups enable you to restore your site to a previous state in case of issues like plugin conflicts, updates causing crashes, or cyberattacks.

Methods to Back Up Your WooCommerce Website

Using Backup Plugins:

Plugins like Jetpack VaultPress Backup offer real-time backups, off-site storage, and one-click restores. Duplicator Pro allows for scheduled backups, cloud storage integration, and disaster recovery options.

Through Your Web Hosting Provider:

Many hosting providers offer backup services as part of their plans or as add-ons. These backups are typically stored on the same server network for shared plans, although you can also use dedicated backup solutions for enhanced security.

Manual Backups:

Manually download your website files via FTP and export your database using tools like phpMyAdmin.
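
Once the files are downloaded, packaging them into a dated archive keeps manual backups organized. The sketch below is a minimal example with hypothetical folder and file names; it covers site files only, so the database export from phpMyAdmin still needs to be saved alongside it.

```python
import shutil
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def backup_site_files(site_dir, backup_dir):
    """Zip a local copy of the site files into backup_dir with a UTC timestamp."""
    backup_dir = Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now(timezone.utc).strftime("%Y%m%d-%H%M%S")
    archive_base = backup_dir / f"site-files-{stamp}"
    return Path(shutil.make_archive(str(archive_base), "zip", root_dir=str(site_dir)))


# Demonstration with temporary folders standing in for a downloaded site copy.
with tempfile.TemporaryDirectory() as tmp:
    site = Path(tmp) / "site"
    site.mkdir()
    (site / "index.php").write_text("<?php // placeholder file")
    archive = backup_site_files(site, Path(tmp) / "backups")
    print(archive.name)
```

Keep at least one copy of each archive somewhere other than the server itself, such as cloud storage or an external drive.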

FAQs about WooCommerce SEO

Start with keyword research tools like Google Keyword Planner or Ahrefs to find terms your audience searches for. Focus on keywords relevant to your products and prioritize long-tail phrases with lower competition.

A meta description is a short summary of a page that appears in search results. It helps users decide whether to click your link, and compelling descriptions with keywords can boost click-through rates.

Yes, plugins like Yoast SEO or Rank Math help manage technical SEO, optimize content, and generate sitemaps, simplifying the optimization process for WooCommerce.

A sitemap lists all the important pages on your site, helping search engines index them more effectively. Submitting your sitemap to Google and Bing ensures your content is discoverable.

Use descriptive category names, add keyword-rich descriptions, and include internal links to related products. This improves navigation and helps search engines understand your site structure.

Reviews add fresh content to product pages, which search engines value. They also increase customer trust, leading to better conversions and higher rankings.

Fast-loading sites provide a better user experience, which is a ranking factor for Google. To improve speed, you can optimize images, use caching, and choose a reliable hosting provider.

Final Thoughts

WooCommerce SEO is an effective tool for driving traffic and growing your online store, but it requires a balance of both on-page and off-page strategies. From optimizing your product pages and images to building backlinks and leveraging social media, each step works together to improve your store’s visibility and rankings.

While the process may seem challenging at first, the long-term benefits—higher search rankings, more traffic, and increased conversions—make it a worthwhile investment.

Implement these strategies consistently and unlock your store’s full potential—your customers are waiting to find you!